Bringing a Client’s Dreams to Life, Using AI

Felicia, thank you for participating in this interview! Can you tell us more about the history of this project? How did it come about?

Audrey and I worked together on a completely unrelated project connected with her day job back in 2013. When she began cataloging her mother’s archive a few years later and realized she needed professional photography, she reached out to me. At that time, Audrey’s memoir project was in its infancy. We photographed many paintings over several sessions, hundreds of paintings if I remember correctly.

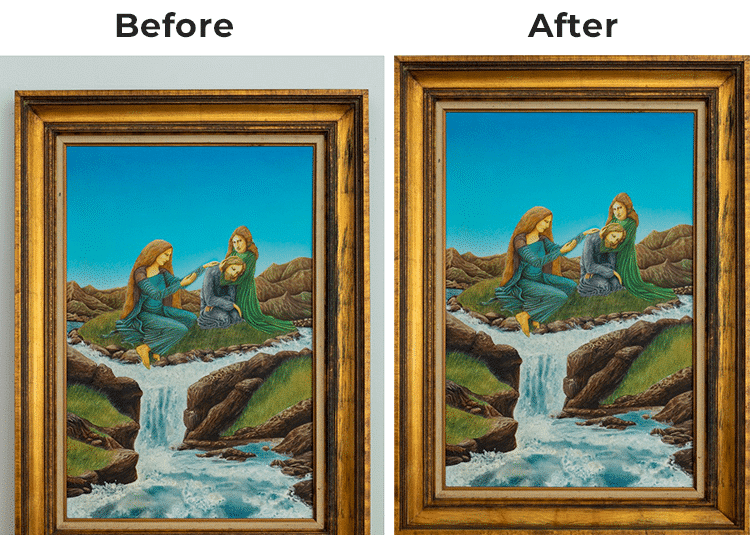

We were concerned with the paintings themselves, the canvas works, and paid little attention to the frames. In many cases, I composed my photos to include the whole painting AND frame so that I’d have ultimate flexibility in post-production cropping and aspect ratios. I did NOT include the entirety of every frame, and oftentimes we didn’t even remove the foam frame bumpers that had been protecting the works in storage.

Fast forward to 2023. After years of working closely with her mother’s works, Audrey decided that her upcoming books really should include some photos that include the (often very ornate) frames. Unfortunately we didn’t foresee the evolution of the memoir when we did our original photo shoots. I did not have the entirety of many of the frames she wanted. This is where some creative thinking and new technology came into play.

How did you come to the solution of using AI while working with Audrey on this project?

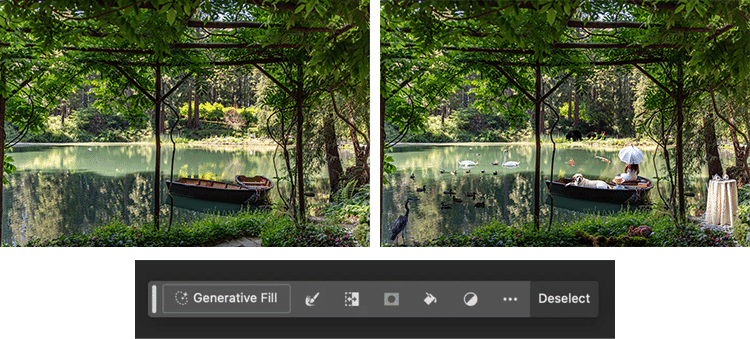

I wanted to be able to please my client, fulfill her needs, and come out a hero! I’ve been following Adobe’s AI feature announcements and had played a little bit with the Generative Fill feature. I had read about the Generative Expand feature as well, and my reading plus my experience with Generative Fill led me to believe I could possibly recreate frame parts using AI.

Here are some of my early explorations:

I floated the idea to Audrey, wanting to be fully transparent with my idea. The risk was minimal. At worst, it wouldn’t work, we’d have wasted a little bit of time and money, and she’d still be missing the images with frames. At best, it would work well and she’d get what she wanted. She was on board to give it a try!

What kind of limitations did you experience when using AI in Photoshop and/or Lightroom? How did you work through them?

I encountered limitations in both Lightroom and in Photoshop. Lightroom wasn’t actually involved in the process at all; it could have been completely skipped EXCEPT that I use LR for organizing my images and I had applied edits to the RAW photos in LR.

To open images directly in Photoshop, I needed to find them on my hard drive without the use of LR (not all that hard for me, actually, because I have a good asset management system). Due to some quirk that I assume comes from the Beta nature of Photoshop, they opened without their edits applied, even though their corresponding sidecar (XMP) files were available.

Opening directly from LR was tricky too. First off, LR only allows one version of Photoshop to be listed as the External Editor at a time, so I had to change my preferences to use Photoshop Beta instead of Photoshop. Then there was another software glitch. It would take FOREVER to open the files and would give me incomprehensible error messages in the process. If I cleared all those messages and was very patient, the files did open in PS Beta eventually. I overcame this by multi-tasking! I’d start the opening process for a handful of files, then move to emails, and so on, then circle back to the opening process. Again and again.

Once the files were actually launched in Photoshop Beta, the work wasn’t too hard, but it was time consuming and a bit finicky. For most of the images, I used Generative Expand to expand the canvas past the missing frame edges. The software took care of building the frames themselves. PS Beta would serve up 3 options and I’d flip through those to find the most believable frame. In some cases it did not work and I let Audrey know it wasn’t possible with that frame. In others, it was so believable it was creepy. In some, I had to use my other Photoshop knowledge to refine the AI frames, using cloning, masking, and other tools. Sometimes I had to run Generative Fill as well as Generative Expand (for example on the frames with foam bumpers in some spots).

This must’ve saved you a lot of time. Can you compare your typical editing process vs. when you’re able to use AI?

While this did save me time, it wasn’t the blink of an eye. First off, I doubt I would have taken on this project without AI. I likely would have told Audrey that unfortunately we didn’t think of all scenarios when we did the original photo session years ago. I would have offered her a new photo shoot. That would have cost her time and much more money, and realistically wouldn’t have been feasible with my end-of-busy-season calendar as I’m in my last hard push of the year and have a packed schedule. Waiting on me to get her into the shoot rotation would have meant delaying her book AND going over budget.

I’m not a professional retoucher. I know how to do it, but since it isn’t my main thing I am slow and probably not up to date on all the tricks. Because I don’t have that dedicated experience poured into retouching, I’m also not always able to get a perfect result. Passable but not epic. Consider this analogy: I know how to change my oil. It takes me a full day because I do it so rarely and it usually makes a mess. A mechanic changes oil every day. They do it in a blink of an eye and can probably do it in their sleep. Outsourcing makes more sense (for time and for money and for frustration, as well as for the final result). Retouching is the same…I prefer to outsource.

If I had attempted to generate missing frame parts manually, it would definitely have taken more time. I averaged about four AI completions an hour (not calculating wait time for the software glitches) and I’d guess each frame would have taken me about an hour to get right.

I offered up the AI option because I wanted to try to fulfill a client need and because I was curious to see what AI could do. If it had flopped, I doubt I would have charged her for my experiment. It was a learning experience for me and as a professional I always need to stay abreast of current tech.

To circle back to the actual question, how would I compare the manual process vs. the AI process? Again, knowing I’m not truly a retoucher, so someone else might have a better workflow… I would have created a new layer (several layers, likely) so that my work was not on my background (i.e., non-destructive editing). I would have changed my canvas size to accommodate the edges I was planning to build. I would have used a combination of selecting/copying/pasting/transforming parts of the image and the clone stamp to fill in the missing areas. There would have been many selection tools and masking techniques in action as well. None of it is super complex, but it’s all finicky and it is so easy to leave digital artifacts that give away your work.

The finished images will be incorporated into further print and online collections, enabling people to learn more about the artist Niki Broyles’ amazing body of work.

Read more about Niki Broyles’ visionary artwork on her website and in her memoir, Mystery of the Mother, written by her daughter Audrey Broyles.

In what ways do you think AI is or will become useful in photography? Do you see many photographers currently embracing it?

I think AI is useful but that it isn’t perfect (at this time). I “embraced” it before this particular project ever began, by pivoting some of my efforts as soon as AI tech became common. For example, straightforward e-commerce photography (white background, boring light) is something I feel could be done by AI (now or in the near future). Many years ago this was my bread and butter.

I have a few clients hanging on in this genre, but I’m never going to be able to compete with AI on price…nor do I want to. I won’t be pursuing these clients in the future. Another example is LinkedIn portraits. Lots of people want a good-looking headshot for their resume. It has never been a high-budget genre, but it has been good calendar filler for me. $200 got my client 15 mins and 5 photos, and I barely covered expenses at that price. Now you can get a huge selection of AI portraits for like $40. Even if you do have 6 fingers and an extra elbow in half of them, I’ll never beat that price.

AI has already been in use for Culling (in external programs) and Local Editing in Lightroom to great success. I love the efficiency of passing those tasks off. In the past, perhaps I would have paid an assistant to cull my images. I’ve never felt I had the budget for that. AI Culling is more affordable. Maybe not as good, but now within the budget.

AI will possibly weed out some less skilled photographers, but if you are good at business, work hard to please your client, and deliver a result that is superior to AI, there will still be plenty of work. A CEO is still going to need a better portrait session than an AI headshot. Weddings and events can’t shoot themselves. There is a lot of room for AI and for photographers in this world.

What kind of ethical issues do you think photographers should keep in mind when using AI?

It is important to be upfront about the tools you are using if you are in a “truth” setting (think news photography, product photography, food photography, real estate photography). It would be ethically wrong to make something out of nothing in a documentary photo for example.

If you lean toward art over truth, I don’t personally see an issue with it. You aren’t trying to sell reality.

An additional ethical issue is how the AI machines were trained. Many photographers feel that feeding their works to AI to train the software was stealing their hard work without pay or acknowledgement. I don’t know enough about it at this time to take a hard stance in one direction or another.

Lastly, if you are using AI for client work, it isn’t a bad idea to get client consent. I told Audrey outright that I was going to try AI. I did this firstly to temper expectations… it may not have worked. I also did it to give her an opportunity to object. If she had personal feelings toward AI she might have told me not to do it.

Do you have any advice or words of caution for students who may want to use AI in their photography work?

Photography is a competitive market. Do anything you can to stay current. Whether or not you choose to use AI in your own work, you should play with it. See how the technology works. Brainstorm ways it could help or hurt your own business. And for sure, keep informed of current news, discussions, and controversies around it.

For more information about Niki Broyles’ visionary artwork, visit her website at Mystery of the Mother. For more perspectives on creative uses of AI at Sessions, read What Photoshop’s new AI Tools Mean for the Creative Community. For information on our online digital photography degree and certificate programs, visit our photography majors page.

Lauren Hernandez is the Manager, Faculty and Curriculum Development at Sessions College. Lauren is an artist, educator, and former middle-school teacher with a passion for everything art-related. Outside of work, Lauren likes to take care of her plants and hang out with her bird.
